I’m reluctant to use ‘no-code’ to describe any computing application. It’s a great term for the marketing of GUIs and visual programming environments to non-technical users but, much like labelling C# and C++ ‘no-binary’ because they are text-based, this ignores the reality of how our computers actually operate and the work of people who maintain the abstractions the vast majority of programmers rely on. From machine code and kernels to networking and graphics, most of us won’t consider every abstraction every day, only peering behind the curtains when we need to. In fact, there are a lot of developers whose work means they may never consider them at all - so why am I writing about them now?

A large majority of devices today have enough resources to simply consume whatever application a semi-competent programmer tells them to. On the web, we use frameworks that take advantage of libraries, overwhelmingly written in JavaScript, which is itself interpreted by a compiled C++ engine and ultimately becomes machine instructions. These abstractions make developers’ lives easier and can be the most robust or efficient version of the work we want to do, but they can also lead to a diminished sense of responsibility for when and how to keep code efficient. As a result, opinions vary on whether work should happen on the client or on the server (jakelazeroff.com, overreacted.io).

These layers of abstraction matter because, while an overwhelming majority of users won’t care how their software works (just as long as it does), programmers need to pay attention to the compromises and benefits of each one as we accumulate more on top.

As devices like VR headsets, Microsoft Hololens, and Apple’s Vision Pro hit the consumer electronics market, it’s easy to think of the rise of ubiquitous computing as a march towards something out of Ready Player One, gluing us even more to our everyday devices. We’re not there (yet 😨) but chat interfaces like Gemini and ChatGPT are already replacing keyboards and touchscreens for a lot of everyday searches. More broadly, they are used for data retrieval and generation. This way of interacting with software will become the norm and remain in use even after fully immersive experiences are common. I’d like to explore how low-code systems and conversational interfaces, or rather agent-based interfaces, can be combined to give end users the greatest flexibility in personal device functionality and provide greater levels of abstraction together than either would alone.

Low-code systems

Low-code systems have their roots in the early days of computing when the need to simplify programming and make it more accessible became apparent. In the 1980s and 1990s, visual programming languages like HyperCard and Visual Basic emerged, allowing users to create applications through graphical interfaces rather than writing extensive lines of code. These early systems laid the groundwork for modern low-code platforms by demonstrating that software could be built with minimal coding, thus opening up development to a broader audience.

Modern platforms provide pre-built components, drag-and-drop interfaces, and integration capabilities, significantly reducing the time and effort required to develop software. Today, low-code systems are widely used to create internal company tools, automate workflows and processes, and develop customer-facing applications.

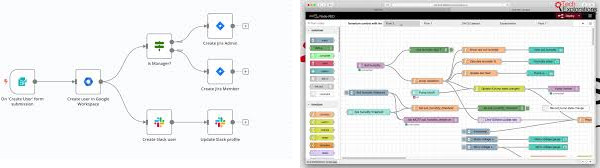

In the video game industry Scratch is used to teach programming using a low-code pseudo-code interface and the Unreal Engine enables game creation using its flow-based ‘Blueprints’ offering for features such as shaders, particle systems, dialogue flows, and character behaviour. In a similar domain, Blender implements a low-code interface for creating materials on 3D models. On smartphones, Automate and Apple Shortcuts allow users to create no-code automations for their devices. For general-purpose and business-oriented use cases, managed platforms such as OutSystems, Appian, Mendix, Make, IFTTT, Zapier, Zoho Creator, and others offer a variety of integrations with external services, and the maintainers of the open-source Node-RED and n8n platforms also offer managed services for deploying low-code systems at enterprise scale. In the open-source space, flow-based interfaces are being used for machine learning with ComfyUI and image editing with GimelStudio, and React Flow provides an editor library that is used downstream in other applications.

low-code interfaces - flow-based


In a flow-based system, data moves sequentially from one block to the next, similar to functional programming. These interfaces are conceptually easier to understand as each block has a single input and output, making the flow of data straightforward and predictable. On the other hand, graph-based systems allow for more complex interactions where data can be pulled from previous blocks based on triggers and the flow of data is more granularly defined. This requires users to think more carefully about data types, structures, and flow, so the trade-off between flow-based and graph-based interfaces is whether the user or the system handles types and complexity. Both approaches have their merits and can be highly effective depending on the use case and target demographic. Flows are easier to start with, but graphs can be more robust. Personally, I’d like to see a hybrid mode offered, where users can opt in to see type validation and separately opt in to more complex graph structures. This would offer the most versatility from a low-code interface, but perhaps wouldn’t be user-friendly for beginners and therefore might not be worthwhile to low-code platform developers.
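The difference can be sketched in a few lines of Python. This is an illustrative model only, not how any of the platforms above are implemented: `run_flow`, `run_graph`, and the node names are hypothetical, and the graph runner assumes nodes are listed in dependency order.

```python
from typing import Any, Callable

# Flow-based: each block takes one input and returns one output,
# so a flow is just an ordered list of functions.
Block = Callable[[Any], Any]


def run_flow(blocks: list[Block], value: Any) -> Any:
    for block in blocks:
        value = block(value)
    return value


# Graph-based: each node names the earlier nodes it pulls data from and
# declares the type it should produce, so types become the user's concern.
Node = tuple[Callable[..., Any], list[str], type]


def run_graph(nodes: dict[str, Node], inputs: dict[str, Any]) -> dict[str, Any]:
    results = dict(inputs)
    # Assumption: nodes are given in dependency order (dicts keep insertion order).
    for name, (func, sources, expected) in nodes.items():
        output = func(*(results[source] for source in sources))
        if not isinstance(output, expected):
            raise TypeError(
                f"{name} produced {type(output).__name__}, expected {expected.__name__}"
            )
        results[name] = output
    return results


flow_result = run_flow([str.strip, str.upper, len], "  hello ")

graph_result = run_graph(
    {
        "trimmed": (str.strip, ["text"], str),
        "length": (len, ["trimmed"], int),
        # This node pulls from two earlier nodes, which a linear flow cannot express.
        "label": (lambda text, n: f"{text} ({n})", ["trimmed", "length"], str),
    },
    {"text": "  hello "},
)
```

In the flow, nothing needs to be declared beyond the order of the blocks. In the graph, the `label` node can reuse two earlier results, but only because every connection and output type has been spelled out.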

Unreal blueprints - graph-based


My own attempt

I’ve been working on my own low-code system. It is far from complete and may never leave the ‘alpha’ stage of development, but it’s allowed me to target composable units of functionality and it’s forced me to think about the entirety of the software development lifecycle, algorithms, and the user experience of the full range of software developers, from those creating low-level code, to those who simply want to make their device “do a thing”. It’s an excellent learning exercise and has improved my knowledge of a variety of web APIs.

Visual programming’s future

The abstraction level offered above text is thin as visual programming environments frequently fall back to it when they need to be extended. If your specific low-code platform provider™️ doesn’t have integration with the other services you use, the will to add them, or existing functionality blocks for you to work around those, then you will find yourself rolling some of your own code anyway.

Research for my master’s degree revealed that two-thirds of technology professionals see the benefits of low-code platforms and that their usage is increasing because they can allow users and businesses to respond rapidly to requirement changes and accelerate digital transformations. Concerns still exist around vendor lock-in and issues with integration and interoperability; therefore the functionality available on each platform is an important consideration before businesses can commit to one. In addition to improved documentation, academics recommended joint efforts to standardize platforms in order to overcome these issues. I think this simply won’t happen willingly since it reduces the incentive for a customer to pick your offering over another; the battle will be in the number of integrations, cost, and ability to scale. For example, IFTTT supports 630 providers and executes actions over 1 billion times per month, and they don’t want to lose that to a competitor.

An interesting area of the research covered technical vs non-technical users. Block-based interfaces have become widely used among non-programmers. However, no-code should not equate to no training when users first interact with the systems; users with an undergraduate level of programming experience made half as many mistakes when interacting with low-code systems as those without any. Non-technical users struggled to understand the purpose of condition blocks. This shows that there is still a need for programmers to be involved with low-code environments, whether to verify processes, develop functionality that other users cannot, or simply to produce accessible documentation or examples on how to use the platform and the nodes within it.

Low-code platforms can enable end-user developers to build their own software systems and automate business workflows with little or no programming knowledge. The focus spans commercial, residential, and personal settings, as low-code development platforms (LCDPs) have proven to be beneficial in many areas of automation. Comprehensive documentation is a necessity in order for low-code to be adopted by non-technical users, and a lack of standardization makes extending platforms difficult.

These findings are backed up more recently by Gartner’s 2024 Magic Quadrant analysis. They predict that 60% of software organizations will use enterprise low-code application platforms as their main internal platform in the near future (up from 10% in 2024) and that enterprise low-code platforms will be used for mission-critical application development in 80% of businesses globally (up from 15% in 2024). Their findings show a similar variety in the number of service integrations as I saw in my research, but the prevalence of LLMs and conversational interfaces the world has seen since the report was published means that the main UI for truly extensible systems has changed from being flow/graph-based to chat-based. Natural language can now be used to replace or augment the blocks seen in low-code systems.

Agent-based systems

Once you give an LLM the ability to run in the background and interact with its environment in some way it becomes an LLM agent. Agents operate based on predefined rules and can adapt to changes in their environment, communicate with other agents, and make decisions autonomously. This approach allows for the simulation of intricate systems and processes, providing insights into how individual actions can lead to emergent behaviors and outcomes in larger systems.

Completely frictionless interaction with technology like this excites me because of the positive impact it can enable rather than the humanity we’re at risk of it replacing. The energy use required to train LLMs is a massive problem, as are the privacy concerns that come with data scraping by companies for training and job losses where activities previously done by people are replaced by machine learning models. I’ll always advocate for ethically, legally sourced training data and, where it makes sense, locally run models. I’m also cautiously optimistic that increased energy demand from LLM usage could lead to a greater push for green energy sources. Given that LLMs are being integrated in many places that make sense as well as many that don’t, I want my focus to be on how increased extensibility can empower everyday users (including me!) to act with more intention when using them and the rest of their technology. The Model Context Protocol offers a way to do that.

The Model Context Protocol

The Model Context Protocol (MCP) is described on its website as “an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications … MCP provides a standardized way to connect AI models to different data sources and tools” - and that idea fits really well with where I want to see software go. Since the entry point of software systems will become chat and voice-based conversational interfaces, MCP is well positioned to succeed with its three current capabilities:

- Resources: File-like data that can be read by clients (like API responses or file contents)

- Tools: Functions that can be called by the LLM

- Prompts: Pre-written templates that help users accomplish specific tasks

What this does for low-code software is move the interface between functional blocks from user-defined connections into the latent space of your model where, as long as it doesn’t hallucinate, you or your agent can take actions on structured information without having to worry about the complexities of graphing connections and data types between services. Of course, this is because data types have already been handled by the software developer who created the MCP server, but a common API like this still lowers the barriers to inter-system interaction.
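To make the three capabilities and the point about pre-handled data types more concrete, here is a toy model using only the Python standard library. It is not the MCP SDK or its wire protocol: `ToyServer`, `call_tool`, the `notes://today` URI, and the `add` tool are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToyServer:
    """Illustrative stand-in for a server exposing the three capability kinds."""

    # Resources: file-like data that a client can read.
    resources: dict[str, Callable[[], str]] = field(default_factory=dict)
    # Tools: functions plus a developer-declared argument schema.
    tools: dict[str, tuple[Callable[..., Any], dict[str, type]]] = field(
        default_factory=dict
    )
    # Prompts: pre-written templates.
    prompts: dict[str, str] = field(default_factory=dict)

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        func, schema = self.tools[name]
        # The schema is checked here, so the caller (a person or an agent)
        # never has to wire up types between services by hand.
        for key, expected in schema.items():
            if not isinstance(arguments.get(key), expected):
                raise TypeError(f"{name}: '{key}' must be {expected.__name__}")
        return func(**arguments)


server = ToyServer()
server.resources["notes://today"] = lambda: "Buy milk"
server.tools["add"] = (lambda a, b: a + b, {"a": int, "b": int})
server.prompts["summarise"] = "Summarise the following text: {text}"

total = server.call_tool("add", {"a": 2, "b": 3})
note = server.resources["notes://today"]()
prompt = server.prompts["summarise"].format(text=note)
```

The type checking a graph-based editor would push onto the user happens once, inside `call_tool`, which is roughly the role the paragraph above assigns to whoever builds the server.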

Why care about visual programming at all?

Apple’s Siri assistant waveform


In the near future we’ll see more pretty waveforms as the primary pattern for voice-based conversational interfaces, falling back to text-based chat interspersed with images and video when simply talking doesn’t cut it. Beyond that, I fear the interfaces will keep moving towards uncanny simulacra. It seems inevitable that at some point both types of conversational interface will become expected in place of icons and traditional apps for swaths of daily smart-device needs. However, in the same way that current visual programming is built on and falls back to text-based programming, which itself relies on binary encoding and the abstractions below, there will always be a place for the ‘power-user UI’ with more advanced features. This is because the easier an interface is to use, the less it typically allows you to do; though maybe LLMs and MCP will change that?

In an ideal world LLMs would have used licensed, ethically sourced data, been trained inside renewable-powered data centers, and all be published with provenance for who operates them and how. For the world at large that’s demonstrably not been done, so I guess I’m still optimistic for a future where it could be and the silver linings we can pick up along the way. 😅 In software engineering, it’s important to make code testable (predictable and repeatable) and the same is true for these highly abstracted applications too. An agent taking actions and making changes within a black-box latent space can make these applications hard to use and also hard to rely on when you want to take complex, repeated actions. The solution for making LLM agents both extensible and reliable, without relying on carefully crafted prompts, is not to fall back to text-based programming (possibly generated by the same agent) that everyday users may not understand, but to provide a visual programming environment instead. To save effort with the implementation, MCP could even be used for both. This combination of abstractions allows users with any level of ability to make use of LLM agents and have confidence that the low-code tasks they configure will behave as expected.
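One way to picture that repeatability, as a rough sketch rather than a description of any existing platform: a flow is saved once as plain data, whether it was drawn in a visual editor or proposed by an agent, and is then executed without the model in the loop. The block names, the JSON layout, and `run_saved_flow` are all assumptions made for this example.

```python
import json
from typing import Any, Callable

# Hypothetical blocks a platform might expose; the same functions could
# also be offered to an agent as tools.
BLOCKS: dict[str, Callable[..., Any]] = {
    "split_words": lambda text: text.split(),
    "take": lambda items, count: items[:count],
    "join": lambda items, separator: separator.join(items),
}

# A saved flow, as a visual editor (or an agent) might serialise it.
SAVED_FLOW = json.dumps(
    [
        {"block": "split_words"},
        {"block": "take", "params": {"count": 2}},
        {"block": "join", "params": {"separator": "-"}},
    ]
)


def run_saved_flow(definition: str, value: Any) -> Any:
    # No model is consulted here: the same definition and input
    # always walk through the same blocks in the same order.
    for step in json.loads(definition):
        value = BLOCKS[step["block"]](value, **step.get("params", {}))
    return value


first = run_saved_flow(SAVED_FLOW, "make my device do a thing")
second = run_saved_flow(SAVED_FLOW, "make my device do a thing")
```

Because the saved definition is ordinary data, it can be shown as blocks to a non-technical user, diffed and tested by a programmer, and rerun with the same result each time.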

Activepieces is one system that implements this. It’s under heavy development and uses a freemium model where the low-code elements are open-source but a paid account is required to access MCP features. It is not a ready-to-go solution for everyone for this reason. However, it’s still a great, real example of what I think configurable, extensible software interfaces could be.